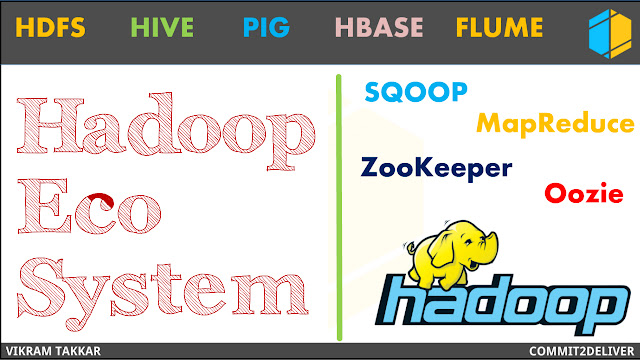

Hadoop is a framework made up of a set of tools and technologies that together form an ecosystem. Different tools are used in different parts of a project, depending on what each one implements and the features it offers. The Hadoop ecosystem includes both official Apache open-source projects and a number of commercial tools and solutions. Open-source examples include Hive, Pig, Oozie and Sqoop. In this article we cover the open-source tools that are part of the ecosystem. Let's start with HDFS.

Hadoop Distributed File System (HDFS)

HDFS is the storage component of Hadoop: it stores data in a distributed manner so that it can be processed faster. HDFS splits each incoming file into blocks (128 MB by default in Hadoop 2 and later; the size is configurable) and stores those blocks across the machines in the cluster. HDFS runs on top of each machine's local file system; it is a logical file system layered over the physical storage present on the various machines in the Hadoop cluster.
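
To make the block arithmetic concrete, here is a minimal Python sketch that computes how a file would be divided into HDFS-style blocks. It assumes the 128 MB default block size (in a real cluster this is set by the `dfs.blocksize` property), and the helper `split_into_blocks` is hypothetical, not part of any Hadoop API.

```python
# Hypothetical helper: models how HDFS divides a file into fixed-size blocks.
BLOCK_SIZE = 128 * 1024 * 1024  # assumed 128 MB default block size


def split_into_blocks(file_size: int, block_size: int = BLOCK_SIZE) -> list[tuple[int, int]]:
    """Return (offset, length) pairs, one per block."""
    blocks = []
    offset = 0
    while offset < file_size:
        # The last block holds only the remaining bytes; it is not padded to full size.
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks


if __name__ == "__main__":
    mb = 1024 * 1024
    for offset, length in split_into_blocks(300 * mb):
        print(f"block at {offset // mb} MB, length {length // mb} MB")
```

For a 300 MB file this yields two full 128 MB blocks and a final 44 MB block; HDFS does not pad the last block to the full block size.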

HDFS is designed to tolerate a high rate of component failure by replicating each block on multiple machines (three copies by default). It is also optimized for large files: with large blocks, the time spent seeking is small compared with the time spent transferring data. HDFS is built for streaming, sequential access rather than random access. Sequential access means few seeks: Hadoop seeks to the beginning of a block and then reads it sequentially.
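
Replication can be sketched in the same spirit. The Python model below copies each block to three distinct nodes, the default replication factor (`dfs.replication`), and checks whether every block is still readable after some nodes fail. The round-robin placement and the function names are simplifying assumptions; real HDFS uses a rack-aware placement policy.

```python
# Simplified model of HDFS replication: each block is copied to several distinct nodes.
# Assumption: placement is plain round-robin; real HDFS uses a rack-aware policy.
REPLICATION = 3  # HDFS default replication factor (dfs.replication)


def place_replicas(num_blocks: int, nodes: list[str], replication: int = REPLICATION) -> dict[int, list[str]]:
    if replication > len(nodes):
        raise ValueError("replication factor cannot exceed the number of nodes")
    placement = {}
    for block in range(num_blocks):
        # Start at a different node for each block so load spreads across the cluster.
        placement[block] = [nodes[(block + i) % len(nodes)] for i in range(replication)]
    return placement


def readable_after_failure(placement: dict[int, list[str]], failed: set[str]) -> bool:
    """A block is still readable if at least one replica lives on a healthy node."""
    return all(any(node not in failed for node in replicas) for replicas in placement.values())


if __name__ == "__main__":
    nodes = ["node1", "node2", "node3", "node4"]
    placement = place_replicas(3, nodes)
    print(placement)
    print(readable_after_failure(placement, {"node1", "node2"}))
```

With four nodes and three replicas per block, any two nodes can fail and every block still has at least one live copy.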

Any data which needs to be processed by Hadoop should be present in HDFS. Hence we need to ingest data into HDFS from local/remote machines before processing it.

MapReduce

The MapReduce engine is Hadoop's job execution framework. It is based on the MapReduce paper published by Google in 2004. A job consists of a map function, which processes the incoming data and emits it as intermediate key-value pairs. The framework then groups these pairs by key (the shuffle and sort phase) and passes each group to a reduce function, which processes and aggregates the values according to the task's requirements and produces the final output.

An analogy: suppose you have a book with 10 chapters and you have to write a summary of it. You appoint 10 people (the mappers), each of whom reads one chapter. When the mappers are done, another person (the reducer) hears the content of every chapter and writes the final summary of the book. Because the chapters are read in parallel, the task finishes much faster than if one person read the whole book. MapReduce code is written natively in Java (and other JVM languages such as Scala), and Hadoop Streaming lets mappers and reducers be written in other languages such as Python and Ruby.
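
The map, shuffle and reduce steps described above can be simulated in a few lines of Python. This is an in-memory sketch of the programming model using the classic word-count example, not the Hadoop Java API; the function names are illustrative.

```python
# In-memory simulation of the MapReduce model (not the Hadoop API): word count.
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    # Mapper: emit (word, 1) for every word in one input line.
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    # Shuffle and sort: group all values emitted for the same key, ordered by key.
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    # Reducer: aggregate the grouped values for one key.
    return key, sum(values)


def word_count(lines: list[str]) -> dict[str, int]:
    mapped = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


if __name__ == "__main__":
    chapters = ["the cat sat", "the dog sat", "the end"]
    print(word_count(chapters))
```

Each input line plays the role of a chapter: the mappers emit `(word, 1)` pairs independently, and the reducer only ever sees the grouped values for one key at a time, which is what lets Hadoop run many mappers and reducers in parallel on different machines.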

Hive

Hive is a tool in the Hadoop ecosystem that was contributed by Facebook. It is a data warehouse infrastructure built on top of Hadoop. It was built in 2007, and the basic idea behind Hive was to bring familiar database concepts to the unstructured world of Hadoop. Hive is a boon to developers who find it hard to write MapReduce programs in object-oriented languages such as Java: instead, they can write simple SQL-like queries in HiveQL (Hive Query Language, also called HQL). Hive organizes data into databases and tables; when a table is queried, Hive compiles the query into MapReduce jobs that run in the background to fetch the data.
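
As a conceptual sketch, the Python code below shows how a simple HiveQL aggregation could be carried out as a single map and reduce pass. The `employees` table and its rows are hypothetical, and Hive's real query planner adds optimizations and may split a query into several stages.

```python
# Conceptual sketch: how a HiveQL aggregation could be executed as one MapReduce job.
# The query below and the `employees` rows are hypothetical examples.
#
#   SELECT dept, COUNT(*) FROM employees GROUP BY dept;
from collections import defaultdict

employees = [
    {"name": "asha", "dept": "sales"},
    {"name": "ben", "dept": "eng"},
    {"name": "chen", "dept": "eng"},
    {"name": "dara", "dept": "sales"},
    {"name": "eli", "dept": "eng"},
]


def run_group_by_count(rows: list[dict], key: str) -> dict[str, int]:
    # Map: emit (GROUP BY column value, 1) for each row of the table.
    mapped = [(row[key], 1) for row in rows]
    # Shuffle: collect all values for the same group key.
    groups: dict[str, list[int]] = defaultdict(list)
    for group, one in mapped:
        groups[group].append(one)
    # Reduce: COUNT(*) becomes a sum of the 1s in each group.
    return {group: sum(ones) for group, ones in groups.items()}


if __name__ == "__main__":
    print(run_group_by_count(employees, "dept"))
```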

Pig

Pig is a tool developed at Yahoo and later contributed to the Apache Software Foundation. It is used to create data flows for extract, transform and load (ETL), and for processing, aggregating and analyzing large data sets. A Pig script can express a large processing job in a few lines, so it is easy to write and maintain. Pig uses its own high-level language, Pig Latin, and Pig Latin scripts are translated internally into MapReduce jobs that produce the output.
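
A Pig Latin script is a chain of relational steps, each producing a new relation from the previous one. The Python sketch below mirrors that data flow; the Pig Latin in the comment, the input file name `access_log` and the field names are hypothetical examples, and in real Pig each step would be compiled into MapReduce work rather than run in memory.

```python
# Conceptual sketch of a Pig-style ETL data flow, written as Python steps.
# A roughly equivalent Pig Latin script (hypothetical file and field names):
#   logs    = LOAD 'access_log' AS (user:chararray, bytes:int);
#   big     = FILTER logs BY bytes > 1000;
#   by_user = GROUP big BY user;
#   totals  = FOREACH by_user GENERATE group, SUM(big.bytes);
from collections import defaultdict

Record = tuple[str, int]  # (user, bytes)


def load(lines: list[str]) -> list[Record]:
    # LOAD: parse tab-separated text lines into typed tuples.
    records = []
    for line in lines:
        user, size = line.split("\t")
        records.append((user, int(size)))
    return records


def filter_big(records: list[Record], threshold: int) -> list[Record]:
    # FILTER: keep only rows that satisfy the predicate.
    return [r for r in records if r[1] > threshold]


def group_by_user(records: list[Record]) -> dict[str, list[Record]]:
    # GROUP: collect a bag of records per user.
    groups: dict[str, list[Record]] = defaultdict(list)
    for r in records:
        groups[r[0]].append(r)
    return dict(groups)


def sum_bytes(groups: dict[str, list[Record]]) -> dict[str, int]:
    # FOREACH ... GENERATE group, SUM(...): aggregate each bag.
    return {user: sum(size for _, size in bag) for user, bag in groups.items()}


if __name__ == "__main__":
    raw = ["alice\t500", "bob\t2000", "alice\t3000", "bob\t1500"]
    print(sum_bytes(group_by_user(filter_big(load(raw), 1000))))
```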

HBase

Core Hadoop performs only batch processing, and data is accessed only sequentially. That means a job has to scan the entire data set even for the simplest lookup.

HBase is a distributed, column-oriented database built on top of HDFS. It is modeled on Google's Bigtable and is designed to provide quick random access to huge amounts of structured data. It is an open-source, distributed, versioned, non-relational (NoSQL) database management system. HBase gives the Hadoop ecosystem random, real-time read/write access to data stored in HDFS. Data can be stored in HDFS either directly or through HBase, and consumers use HBase to read and access that data randomly.

HBase stores data in column families: the columns of each family are stored together, in contrast to an RDBMS, where the unit of storage is the row and rows are stored one after another. This makes HBase well suited to sparse data sets, which are common in big data use cases, because empty cells take up no space. HBase extends Hadoop by allowing users to update individual records (with atomic operations at the row level) and to keep more than one value for a cell (versions). Hadoop itself is designed for batch processing, whereas HBase also supports real-time, low-latency reads and writes.
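
HBase's logical data model can be pictured as nested maps: row key, then column (`family:qualifier`), then timestamped versions. The Python class below is an in-memory toy of that model, not the HBase client API; the class name and method signatures are assumptions, and in real HBase the number of versions kept is configured per column family.

```python
# In-memory toy of HBase's logical data model (not the HBase client API).
# Assumptions: timestamps are supplied by the caller, and each cell keeps at most
# `max_versions` values, newest first.
from collections import defaultdict


class ToyTable:
    def __init__(self, max_versions: int = 3):
        self.max_versions = max_versions
        # row key -> "family:qualifier" -> list of (timestamp, value), newest first
        self.rows: dict[str, dict[str, list[tuple[int, str]]]] = defaultdict(dict)

    def put(self, row: str, column: str, value: str, ts: int) -> None:
        versions = self.rows[row].setdefault(column, [])
        versions.append((ts, value))
        versions.sort(reverse=True)  # newest timestamp first
        del versions[self.max_versions:]  # drop versions beyond the limit

    def get(self, row: str, column: str, versions: int = 1) -> list[str]:
        # Random access by row key: a dictionary lookup, no scan of the whole data set.
        cells = self.rows.get(row, {}).get(column, [])
        return [value for _, value in cells[:versions]]


if __name__ == "__main__":
    t = ToyTable(max_versions=2)
    t.put("user42", "info:city", "Pune", ts=1)
    t.put("user42", "info:city", "Delhi", ts=2)
    t.put("user42", "info:city", "Mumbai", ts=3)
    print(t.get("user42", "info:city"))
    print(t.get("user42", "info:city", versions=5))
    print(t.get("user99", "info:city"))
```

A lookup by row key goes straight to the requested cell instead of scanning the whole data set, and rows that lack a column simply have no entry for it.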

Sqoop (SQL + Hadoop = Sqoop)

Sqoop is a tool for transferring data between Hadoop and relational databases such as MySQL, Oracle, Teradata, IBM Db2 and Microsoft SQL Server. Internally, Sqoop runs MapReduce jobs to import data from an RDBMS into HDFS and to export data from HDFS back to an RDBMS. Sqoop can import an entire table, or the user can specify predicates to restrict which rows are selected. It can also ingest data directly into a Hive or HBase table.
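
When Sqoop imports a table in parallel, it picks a split column (by default the table's primary key, or one named with `--split-by`), queries its minimum and maximum values, and hands each mapper (four by default, set with `-m`) one slice of that range. The Python sketch below illustrates that range arithmetic; the function `split_ranges`, the table `orders` and the column `id` are hypothetical, and Sqoop's own splitter may compute the boundaries differently.

```python
# Sketch of how a Sqoop-style parallel import could divide a table among mappers.
# Assumptions: an integer split column with known MIN/MAX values; Sqoop's own
# boundary arithmetic may differ.


def split_ranges(min_id: int, max_id: int, num_mappers: int = 4, column: str = "id") -> list[str]:
    """Return one WHERE predicate per mapper covering [min_id, max_id]."""
    total = max_id - min_id + 1
    if total <= 0 or num_mappers <= 0:
        return []
    num_mappers = min(num_mappers, total)  # never more splits than distinct values
    size, extra = divmod(total, num_mappers)
    predicates = []
    low = min_id
    for i in range(num_mappers):
        # Spread the remainder over the first `extra` mappers so sizes differ by at most 1.
        high = low + size + (1 if i < extra else 0) - 1
        predicates.append(f"{column} >= {low} AND {column} <= {high}")
        low = high + 1
    return predicates


if __name__ == "__main__":
    # Each mapper would run one of these queries against the hypothetical `orders` table.
    for predicate in split_ranges(1, 10, num_mappers=4):
        print(f"SELECT * FROM orders WHERE {predicate}")
```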

ZooKeeper

ZooKeeper is a centralized, open-source service for maintaining configuration information, naming, and synchronization in a distributed cluster. Because Hadoop is distributed, partial failures can occur, where some machines fail while others keep running; in such cases ZooKeeper provides highly reliable coordination. ZooKeeper also keeps configuration consistent across nodes: with dozens or hundreds of nodes, it becomes hard to keep configuration in sync and to roll out changes quickly without a central service.
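
ZooKeeper keeps small pieces of shared state in a tree of nodes called znodes; clients can read a znode and leave a watch to be notified when it changes, and updates can be made conditional on the version they last read. The single-process Python toy below illustrates those ideas. The real ZooKeeper client offers analogous operations (for example `create`, `getData` with a watcher, and `setData` with an expected version), but the class and method names here are hypothetical.

```python
# Toy, single-process model of ZooKeeper-style shared configuration (not the real client API).
# Assumptions: znodes are a flat dict of paths; watches fire once, as classic ZooKeeper watches do.
from __future__ import annotations

from typing import Callable


class ToyZooKeeper:
    def __init__(self):
        self.data: dict[str, tuple[bytes, int]] = {}  # path -> (value, version)
        self.watches: dict[str, list[Callable[[str], None]]] = {}

    def create(self, path: str, value: bytes) -> None:
        if path in self.data:
            raise KeyError(f"{path} already exists")
        self.data[path] = (value, 0)

    def get(self, path: str, watch: Callable[[str], None] | None = None) -> tuple[bytes, int]:
        # Optionally register a one-time callback for the next change to this path.
        if watch is not None:
            self.watches.setdefault(path, []).append(watch)
        return self.data[path]

    def set(self, path: str, value: bytes, expected_version: int) -> int:
        _, version = self.data[path]
        # Conditional update: reject writes based on a stale read.
        if version != expected_version:
            raise ValueError(f"version mismatch: expected {expected_version}, found {version}")
        self.data[path] = (value, version + 1)
        # Notify and clear watchers; each watch triggers only once.
        for callback in self.watches.pop(path, []):
            callback(path)
        return version + 1


if __name__ == "__main__":
    zk = ToyZooKeeper()
    zk.create("/app/config", b"batch.size=100")
    seen = []
    value, version = zk.get("/app/config", watch=seen.append)
    zk.set("/app/config", b"batch.size=200", expected_version=version)
    print(seen, zk.get("/app/config"))
```

The version check prevents two nodes from silently overwriting each other's configuration changes, and the watch is what lets every node learn about a change without polling.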

Oozie

Oozie is a workflow management system for Apache Hadoop that maintains the order in which a sequence of tasks needs to be executed. For instance, suppose we have three jobs and the output of one is the input of the next. The sequence is defined as an Oozie workflow, and Oozie, which runs as a Java web application, executes the jobs in the right order.
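
At its core, a workflow engine runs each job only after the jobs it depends on have finished, which is a topological ordering of a dependency graph. Oozie itself reads workflow definitions written in XML; the Python sketch below models only the ordering, using the standard library's `graphlib` (Python 3.9+), and the job names and `run_job` function are hypothetical stand-ins for real Hadoop actions.

```python
# Sketch of dependency ordering as a workflow engine such as Oozie performs it:
# run each job only after all of its upstream jobs have finished.
# Job names and run_job are hypothetical stand-ins for real Hadoop actions.
from graphlib import TopologicalSorter

# Each job maps to the set of jobs whose output it needs as input.
workflow = {
    "sqoop_import": set(),
    "pig_clean": {"sqoop_import"},
    "hive_report": {"pig_clean"},
}


def run_job(name: str) -> None:
    print(f"running {name}")


def execute(dag: dict[str, set[str]]) -> list[str]:
    # static_order() yields every job after its predecessors; raises CycleError on cycles.
    order = list(TopologicalSorter(dag).static_order())
    for job in order:
        run_job(job)
    return order


if __name__ == "__main__":
    execute(workflow)
```

For the three-job chain in the text the order is fixed: import, then clean, then report. With independent branches, any order that respects the dependencies is valid.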
